Latest revision as of 09:46, 20 December 2018

About HODHOOD ISLAMIC ENCYCLOPEDIA

Sponsor

The HodHood Project is a team effort initiated and maintained by AlHuda University in Houston, Texas. The project will build and maintain a platform for all Islamic sciences, in as many languages as possible, wherever authentic and relevant material exists. In its early stage it will serve as a container for all written or published books, and it will eventually grow to include translation of the contents.

Credit

Listed below are all the software and resources used in this web site. Please let us know if we have violated the copyright or license terms of any product.

Contents

Tanzil Project

Tanzil is a Quranic project launched in early 2007 to produce a highly verified Unicode Quran text to be used in Quranic websites and applications. Our mission in the Tanzil project is to produce a standard Unicode Quran text and serve as a reliable source for this standard text on the web. The following text is a requirement of using the Tanzil project resources.

This quran text is distributed under the terms of a Creative Commons Attribution 3.0 License.

Permission is granted to copy and distribute verbatim copies of this text, but CHANGING IT IS NOT ALLOWED.

This quran text can be used in any website or application, provided its source (Tanzil.net) is clearly indicated, and a link is made to http://tanzil.net to enable users to keep track of changes.

This copyright notice shall be included in all verbatim copies of the text, and shall be reproduced appropriately in all files derived from or containing substantial portion of this text.

Sunnah.com

The goal of this website is to provide the first online, authentic, searchable, and multilingual (English/Arabic at the individual hadith level) database of collections of hadith from our beloved Prophet Muhammad, peace and blessings be upon him.

Structure of Concept Tree

corpus.quran.com (http://corpus.quran.com/) is an annotated linguistic resource that shows the Arabic grammar, syntax and morphology for each word in the Holy Quran.

Data Mining Tools

Data Mining

The information in this site was produced using data mining. Data mining is the process of discovering patterns in large data sets, involving methods at the intersection of machine learning, statistics, and database systems. The following tools were used for data mining:

The R Project for Statistical Computing

R is a free software environment for statistical computing and graphics. It compiles and runs on a wide variety of UNIX platforms, Windows and MacOS.

RStudio

RStudio is an active member of the R community. We believe free and open source data analysis software is a foundation for innovative and important work in science, education, and industry. The many customers who value our professional software capabilities help us contribute to this community.

R Shiny

Shiny is an R package that makes it easy to build interactive web apps straight from R. You can host standalone apps on a webpage or embed them in R Markdown documents or build dashboards. You can also extend your Shiny apps with CSS themes, htmlwidgets, and JavaScript actions.

Stanford CoreNLP – Natural language software

Stanford CoreNLP provides a set of human language technology tools. It can give the base forms of words, their parts of speech, whether they are names of companies, people, etc., normalize dates, times, and numeric quantities, mark up the structure of sentences in terms of phrases and syntactic dependencies, indicate which noun phrases refer to the same entities, indicate sentiment, extract particular or open-class relations between entity mentions, get the quotes people said, etc.

ISRI Stemmer

The Information Science Research Institute’s (ISRI) Arabic stemmer shares many features with the Khoja stemmer. The main difference is that the ISRI stemmer does not use a root dictionary. Also, if a root is not found, the ISRI stemmer returns a normalized form rather than the original unmodified word.

Thanks

We appreciate the contributions of the following professionals.

Web Scraping

Abdelhak Mitidji

Data Mining Team

Hicham Moad SAFHI

Vladimir Kurnosov

Wikimedia and Presentation Team

Special thanks to

Felipe Schenone

Karuna Saxena

Pavel Astakhov

Translation and Editing Team

Status and Progress

The HodHood Islamic Encyclopedia Project is a team effort that will build and maintain a platform for all Islamic sciences, in as many languages as possible, wherever authentic and relevant material exists. In its early stage it will serve as a container for all written or published books, and it will eventually grow to include translation of the contents. The main added value of the e-library is the use of data mining techniques to provide better access to Islamic material for our students specifically and the English-speaking Muslim community in general.

Challenges

Conventional search engines are for the most part general-purpose and may not be a good source of authentic Islamic content. Additionally, our students may have to spend a lot of time sifting through the millions of results returned by conventional search engines such as Google.

Data mining techniques are very powerful tools; however, they expect the content to be consistent in order to produce the desired results. We intend to use data mining techniques to classify our content (Quran, Hadith, Fiqh, Islamic Finance, etc.) and tag it with the appropriate classification for faster and more accurate indexing and retrieval. Data mining techniques will also provide a summary and visual representation of the content to help our students comprehend the material and create a visual map of the topics.

Translation

Unfortunately, English Islamic content is primarily translated from Arabic, with many inconsistencies among translators. This is mainly because the English language lacks some commonly used Arabic letters such as “ض” or “ع”, which may be transliterated as “A” or in some cases “U”, and may be preceded by an apostrophe (') or divided into two syllables by a hyphen (-). In many cases the same name is spelled differently within a single document.

The English language also lacks equivalents for many common Arabic terms, for example “خشوع”, “حياء” or “زكاة”.

We have built a Spell Checker utility to ensure consistency among translations, or at least to produce a consistent rendering of Islamic terms. The utility considers the following criteria when suggesting a spelling:

1) Phonetics: how the original word sounds compared to how the suggested word sounds.

2) Word Distance: how many operations need to be performed before the original word is transformed into the suggested word.

3) Word Similarity.

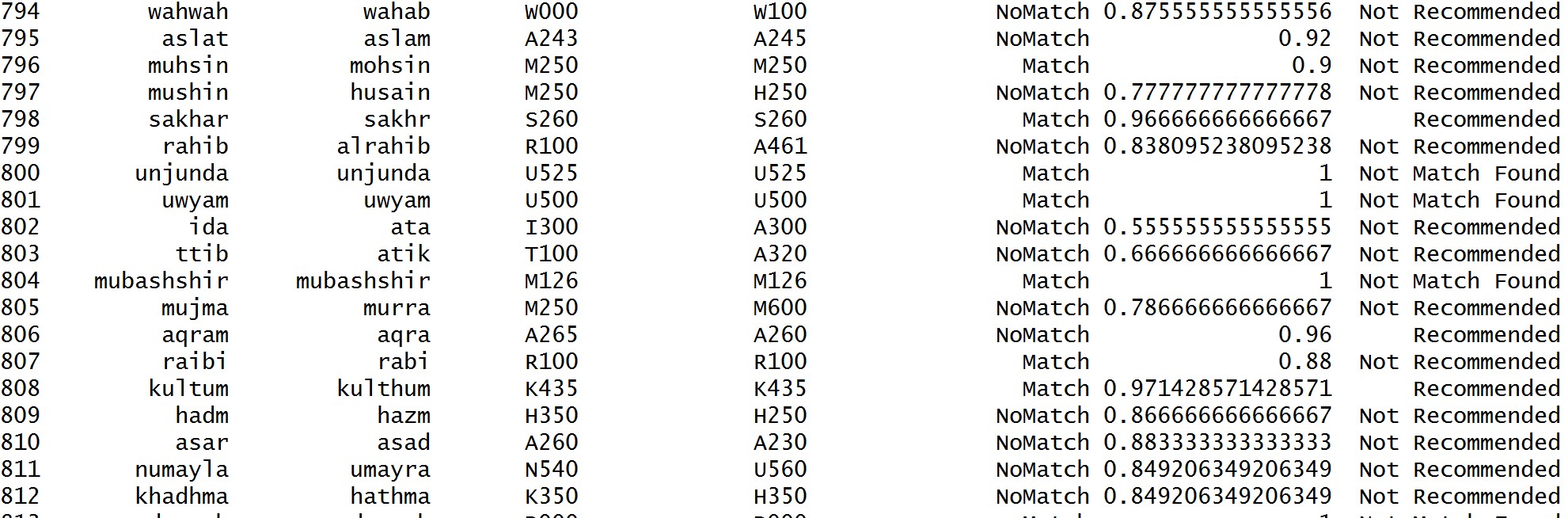

Table showing the Spell Checker Recommendation Utility output. Column 1: “Original Word”. Column 2: “Suggested Word”. Column 3: Original Word “Sound Like”. Column 4: Suggested Word “Sound Like”. Column 5: whether column 3 matches column 4. Column 6: Similarity Distance between the Original and Suggested Word. Column 7: Final Recommendation.
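The three criteria can be illustrated with a minimal sketch. The source does not say which algorithms the utility actually uses, so everything here is an assumption: Soundex stands in for the phonetic comparison, Levenshtein edit distance for the Word Distance, and the `difflib` ratio for the Word Similarity. The function names, the `max_distance` threshold, and the recommendation rule are all hypothetical.

```python
from difflib import SequenceMatcher

# Standard American Soundex letter groups (assumed phonetic scheme).
_CODES = {}
for group, digit in (("BFPV", "1"), ("CGJKQSXZ", "2"), ("DT", "3"),
                     ("L", "4"), ("MN", "5"), ("R", "6")):
    for letter in group:
        _CODES[letter] = digit


def soundex(word):
    """Return the 4-character Soundex code of an ASCII word."""
    letters = [c for c in word.upper() if "A" <= c <= "Z"]
    if not letters:
        return ""
    code = letters[0]
    previous = _CODES.get(letters[0], "")
    for letter in letters[1:]:
        digit = _CODES.get(letter, "")
        if digit and digit != previous:
            code += digit
        # H and W do not separate two letters that share a code.
        if letter not in "HW":
            previous = digit
    return (code + "000")[:4]


def levenshtein(a, b):
    """Number of single-character edits needed to turn a into b."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, 1):
        current = [i]
        for j, char_b in enumerate(b, 1):
            cost = 0 if char_a == char_b else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


def compare(original, suggested, max_distance=2):
    """Build one row in the spirit of the table above (hypothetical rule)."""
    a, b = original.lower(), suggested.lower()
    sound_a, sound_b = soundex(a), soundex(b)
    distance = levenshtein(a, b)
    return {
        "original": original,
        "suggested": suggested,
        "original_sound": sound_a,
        "suggested_sound": sound_b,
        "sounds_match": sound_a == sound_b,
        "distance": distance,
        "similarity": SequenceMatcher(None, a, b).ratio(),
        # Assumed rule: recommend only if the words sound alike and are close.
        "recommend": sound_a == sound_b and distance <= max_distance,
    }


if __name__ == "__main__":
    print(compare("Mohammed", "Muhammad"))
```

In this sketch two spellings are treated as the same term only when both the phonetic code and the edit distance agree, which is one plausible way to combine the criteria; a real utility might weight them differently.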

Transliteration

Dealing with Transliteration and Arabic Names

One of the challenges that we managed to partially resolve is the inconsistent spelling of Arabic names and Islamic terms across translations. Islamic material contains a great deal of transliteration from the Arabic language. Translators opt to transliterate in the absence of a proper English translation, or in cases where they cite sacred text such as the Quran or Hadith. We developed an automated process to identify and translate transliterated text. The process depends on spell checking: if a word is flagged as misspelled, that is an indication of possible transliteration. However, the process is not very reliable, so we also manually searched the material for transliteration and replaced it with an English translation.
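The spell-check-based flagging step can be sketched as follows. This is only an illustration under stated assumptions: the tiny `ENGLISH_WORDS` set is a hypothetical stand-in for a real English dictionary, and the function name and tokenizing pattern are not taken from the project.

```python
import re

# Hypothetical stand-in for a full English dictionary.
ENGLISH_WORDS = {"the", "prayer", "requires", "and", "of", "heart"}

# Words made of ASCII letters, optionally joined by an apostrophe or hyphen.
_TOKEN = re.compile(r"[A-Za-z]+(?:['-][A-Za-z]+)*")


def flag_possible_transliterations(text, vocabulary=ENGLISH_WORDS):
    """Return words missing from the vocabulary, in order of first appearance."""
    flagged = []
    for token in _TOKEN.findall(text):
        if token.lower() not in vocabulary and token not in flagged:
            flagged.append(token)
    return flagged


if __name__ == "__main__":
    sample = "The prayer requires khushu and haya of the heart"
    print(flag_possible_transliterations(sample))
```

A flagged word is only a candidate: ordinary misspellings and proper names are flagged too, which is consistent with the need for a manual check described above.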

Content Classification

We also managed to devise a process that automatically classifies content into topics (Content Classification). We have applied this process to a section of the content with very promising results. We have also managed to automatically identify associations between topics and to structure the topic similarity into Association Rules.
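As a rough illustration of what an association rule between topics looks like, the sketch below derives pairwise rules from documents that have already been tagged with topics. The source does not describe the actual method or tooling, so the function name, the thresholds, and the sample topic tags are all hypothetical; only the standard definitions of support and confidence are used.

```python
from itertools import permutations


def association_rules(tagged_docs, min_support=0.5, min_confidence=0.6):
    """Return (antecedent, consequent, support, confidence) for topic pairs.

    support    = share of documents containing both topics
    confidence = share of documents with the antecedent that also
                 contain the consequent
    """
    total = len(tagged_docs)
    topics = set().union(*tagged_docs)
    rules = []
    for a, b in permutations(sorted(topics), 2):
        count_a = sum(1 for doc in tagged_docs if a in doc)
        count_ab = sum(1 for doc in tagged_docs if a in doc and b in doc)
        support = count_ab / total
        confidence = count_ab / count_a
        if support >= min_support and confidence >= min_confidence:
            rules.append((a, b, support, confidence))
    return rules


if __name__ == "__main__":
    # Hypothetical topic tags for four documents.
    docs = [
        {"zakat", "charity"},
        {"zakat", "charity", "finance"},
        {"prayer"},
        {"zakat", "finance"},
    ]
    for a, b, support, confidence in association_rules(docs):
        print(f"{a} -> {b}: support={support:.2f}, confidence={confidence:.2f}")
```

Checking every ordered pair keeps the sketch short; on a large corpus an algorithm such as Apriori would usually be preferred to prune infrequent topic combinations early.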

Voice to Text Engine

We previously built an indexing engine that converts spoken words to written text. This allows media files to be searched and indexed, and it also makes them available to the Content Classification and Association Rules processes. However, we encountered many challenges in this area that led us to postpone this project until further notice. Some of the challenges are listed here:

1- The technology selected, namely Microsoft MAVIS, supports the English language only.

2- The English voice content we selected, or that was available, is unclear or of very poor recording quality. The quality of the recordings affected the text produced by the MAVIS engine.

3- Most of the English material comes from non-native English speakers with a thick accent, which made it very hard to recognize the spoken words.

4- Non-native English speakers also tend to mispronounce certain English words, because of how words are pronounced in their mother tongue or because their native language lacks certain English letters.

5- Islamic media content also contains many transliterated phrases such as - سبحان الله -, which greatly affected the quality of the final results.

This Wiki

We are now building a sample web site based on Web 3.0 technology, namely MediaWiki, and incorporating the intelligence described above using the built-in semantic capabilities of MediaWiki.

We have also successfully demonstrated and used multi-language capabilities using the built-in features of MediaWiki.